
How AI Reduces Early-Stage Hiring Bias

AI bias reduction in hiring is not achieved through better intentions or stricter policies. It is achieved through structural change in how early-stage hiring decisions are made.

At the top of the hiring funnel, resumes are evaluated under high volume, limited time, and incomplete information. Decisions are made quickly, often before interviews or assessments occur, and those decisions determine who advances and who is permanently excluded. At this stage, even minor inconsistencies can lead to systematic bias. This is why reducing bias with AI has become a priority for organizations seeking fairness at scale.

AI-driven hiring practices focus on replacing subjective interpretation with objective, criteria-based decision-making. Instead of relying on human shortcuts or familiarity signals, AI applies the same evaluation logic and criteria to every applicant. This shift enables less biased candidate evaluation by controlling how resumes are assessed, ranked, and shortlisted before human judgment is reintroduced.

This article explains how AI reduces early-stage hiring bias by examining the technical and operational mechanisms behind fair hiring, including how AI-based methods reduce resume screening bias through structured, auditable processes.

How Bias Enters Early-Stage Hiring Decisions

Bias enters early-stage hiring through inconsistency, not intent.

When resumes are screened manually, recruiters must manage volume by relying on shortcuts. Job titles, employer brand, education history, career continuity, and resume formatting are used as proxies for capability because they are faster to assess than actual relevance. These signals influence outcomes before skills and potential are meaningfully evaluated, undermining unbiased candidate evaluation.

The issue is structural. Each resume is reviewed in a different context, by different reviewers, under varying levels of fatigue and urgency. There is no enforced baseline, no consistent scoring framework, and no reliable audit trail explaining why one candidate advanced while another did not. Once a resume is rejected at this stage, that decision is rarely revisited.

This is the core reason bias mitigation in screening is difficult without automation. Without controlled evaluation logic, early-stage decisions remain vulnerable to proxy-based judgment. Using AI to ensure fair candidate shortlisting requires systems that standardize evaluation criteria and apply them uniformly across the entire applicant pool, creating a foundation for objective, repeatable screening decisions.

The Structural Bias Problem in Manual Screening

Manual resume screening lacks three elements required for bias control: consistency, isolation of variables, and auditability. Each resume is evaluated differently depending on the reviewer's context, timing, and workload.

Because early-stage rejections are rarely reviewed, the bias introduced at this stage becomes permanent. Once filtered out, candidates are excluded from all downstream evaluation, regardless of actual capability.

The Bias-Reduction Mechanisms Used by AI Screening Systems

Reducing hiring bias with AI depends on structured decision logic that applies the same standards to every candidate, regardless of background or resume format. The mechanisms below put that logic into practice.

Objective Criteria Enforcement

AI reduces bias by enforcing consistent evaluation criteria across all candidates. Every resume is assessed against the same predefined skill and role-relevance signals, eliminating variation caused by human fatigue, preference, or familiarity.
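As a minimal sketch of what applying the same predefined criteria to every resume can look like, the Python below scores each candidate against one fixed, weighted rubric. The rubric contents, the weights, and the names `RUBRIC`, `Candidate`, and `score_candidate` are hypothetical illustrations, not AICRUIT's implementation, and the sketch assumes skills have already been extracted from each resume as normalized strings.

```python
from dataclasses import dataclass

# Hypothetical rubric: skill -> weight, fixed before screening begins.
RUBRIC: dict[str, float] = {
    "python": 3.0,
    "sql": 2.0,
    "data modeling": 2.0,
    "stakeholder communication": 1.0,
}


@dataclass(frozen=True)
class Candidate:
    candidate_id: str
    skills: frozenset[str]  # assumed: already extracted and normalized


def score_candidate(candidate: Candidate, rubric: dict[str, float]) -> float:
    """Return the share of total rubric weight covered by the candidate's skills.

    The same function and the same rubric are applied to every applicant,
    so the score cannot drift with reviewer fatigue or familiarity.
    """
    total = sum(rubric.values())
    matched = sum(weight for skill, weight in rubric.items() if skill in candidate.skills)
    return matched / total if total else 0.0


applicant = Candidate("c-101", frozenset({"python", "sql"}))
print(score_candidate(applicant, RUBRIC))  # 0.625
```

Because the rubric is data rather than reviewer judgment, it can be versioned and audited alongside the scores it produced.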

Proxy Signal Deprioritization

Bias often enters through proxy signals such as school names, company brands, employment gaps, or resume formatting. AI systems reduce bias by deprioritizing these signals unless they are explicitly job-relevant, shifting evaluation toward evidence of capability rather than background similarity.
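One simple way to picture deprioritization is to remove proxy fields from a structured candidate profile before scoring, unless the role definition explicitly marks a field as job-relevant. The field names and `strip_proxies` below are hypothetical, and the sketch assumes resumes have already been parsed into key-value fields; a production system might down-weight these signals rather than drop them outright.

```python
# Hypothetical proxy fields that often stand in for background rather than capability.
PROXY_FIELDS = frozenset({
    "school_name",
    "employer_brand",
    "employment_gap_months",
    "resume_template",
})


def strip_proxies(
    profile: dict[str, object],
    job_relevant: frozenset[str] = frozenset(),
) -> dict[str, object]:
    """Drop proxy fields unless the role definition explicitly marks them as job-relevant."""
    return {
        field: value
        for field, value in profile.items()
        if field not in PROXY_FIELDS or field in job_relevant
    }


profile = {"skills": ["sql", "forecasting"], "school_name": "Example U", "employment_gap_months": 14}
print(strip_proxies(profile))  # {'skills': ['sql', 'forecasting']}
```

The function returns a new dictionary, so the original profile stays intact for any later, permitted audit.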

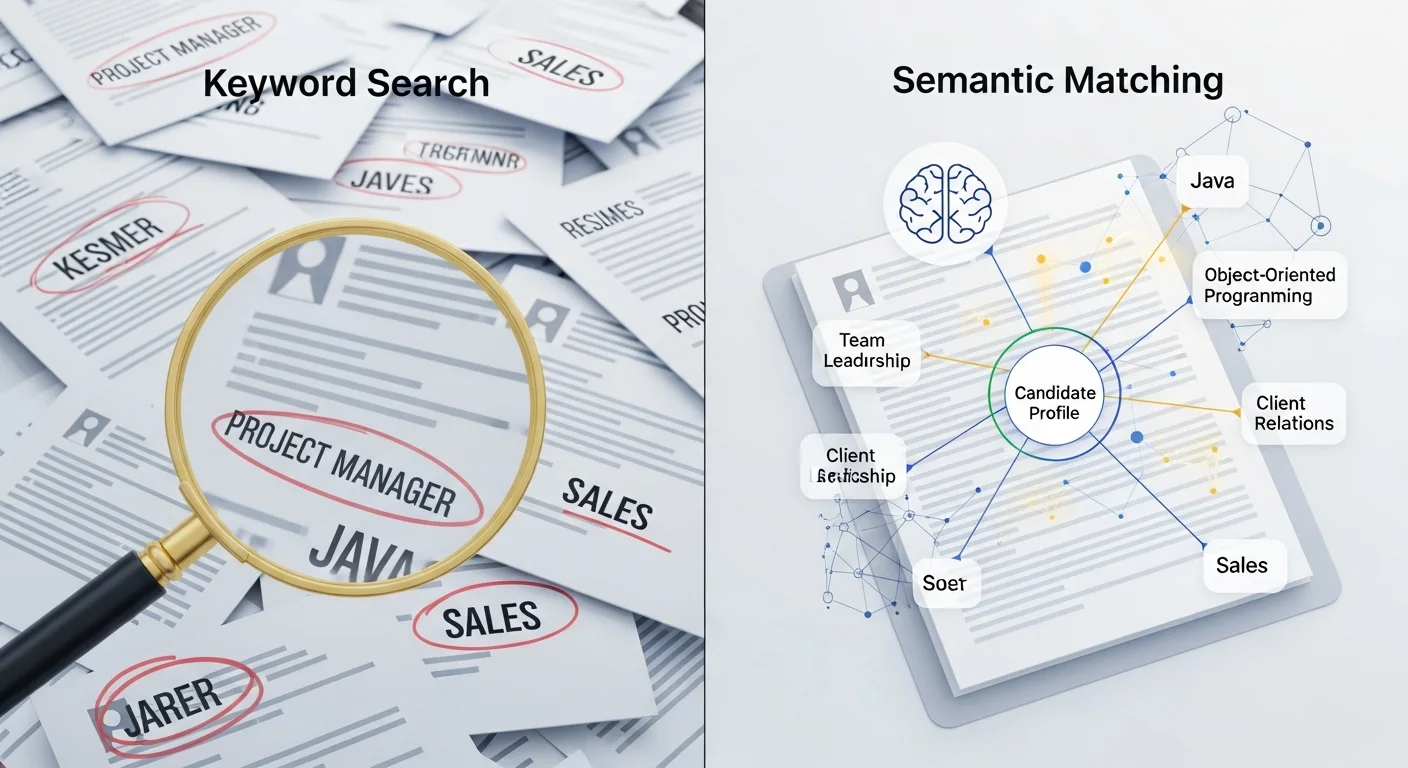

Contextual Skill Interpretation

Rather than relying on exact keyword matches, AI interprets experience contextually. This allows candidates with equivalent capabilities but different terminology or career paths to be evaluated fairly, reducing bias against non-linear or non-traditional backgrounds.
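Contextual interpretation is usually done with learned language models, which are beyond a short example. As a deliberately simplified stand-in, the sketch below maps different terms for the same capability onto one canonical skill before scoring. The `CANONICAL_SKILLS` table and `normalize_skills` are hypothetical; a real system would learn these equivalences rather than list them by hand.

```python
# Hypothetical equivalence table: different wording, same underlying capability.
CANONICAL_SKILLS: dict[str, str] = {
    "postgres": "sql",
    "postgresql": "sql",
    "t-sql": "sql",
    "people management": "team leadership",
    "managed a team": "team leadership",
    "squad leader": "team leadership",
}


def normalize_skills(raw_terms: list[str]) -> set[str]:
    """Map each extracted term to its canonical skill; unknown terms pass through lowercased."""
    normalized: set[str] = set()
    for term in raw_terms:
        key = term.strip().lower()
        normalized.add(CANONICAL_SKILLS.get(key, key))
    return normalized


print(sorted(normalize_skills(["PostgreSQL", "Squad Leader", "Budgeting"])))
# ['budgeting', 'sql', 'team leadership']
```

With this step in place, a candidate who wrote "Squad Leader" and one who wrote "People Management" are credited for the same skill.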

Consistent Ranking and Thresholding

AI applies the same scoring logic and advancement thresholds across the entire applicant pool. This consistency prevents bias caused by changing standards throughout the day, week, or hiring cycle.
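The sketch below shows what "the same scoring logic and advancement thresholds" can look like in code: one sort rule and one cut-off, applied to the whole pool at once. `shortlist` and the threshold value are hypothetical. Ties are broken by candidate ID purely so the ordering is reproducible, not because the ID carries any meaning.

```python
def shortlist(scores: dict[str, float], threshold: float) -> list[str]:
    """Rank the entire pool by score and advance everyone at or above a single threshold.

    Sorting by (-score, candidate_id) gives a deterministic order: the same inputs
    always produce the same shortlist, whenever in the hiring cycle it runs.
    """
    ranked = sorted(scores.items(), key=lambda item: (-item[1], item[0]))
    return [candidate_id for candidate_id, score in ranked if score >= threshold]


pool = {"c-104": 0.70, "c-101": 0.70, "c-107": 0.40, "c-102": 0.90}
print(shortlist(pool, threshold=0.6))  # ['c-102', 'c-101', 'c-104']
```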

Studies referenced by Harvard Business Review emphasize that structured evaluation consistently outperforms unstructured judgment. AI enforces that structure at a scale and level of consistency that humans cannot maintain manually.

Continuous Fairness Monitoring

Continuous monitoring for bias is a distinct advantage of AI over manual screening. Leading platforms establish rigorous, transparent fairness metrics that track real-time candidate selection rates across demographic cohorts (where permitted by law).

AI systems that support fair hiring provide explainable scoring, showing which skills influenced ranking decisions and how candidates were evaluated relative to role requirements.

If the system detects that candidates with certain characteristics, including characteristics inferred from non-PII data, are being disproportionately ranked lower, it flags the disparity for human review. Comparing selection rates across cohorts in this way is how teams verify that the AI is fair, not just fast.
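Comparing selection rates across cohorts is commonly done with an adverse impact ratio: each cohort's selection rate divided by the highest cohort's rate. The 0.8 default below follows the "four-fifths rule" from the US Uniform Guidelines on Employee Selection Procedures, used here only as an illustrative flagging threshold for human review. The cohort labels and the `flag_adverse_impact` function are hypothetical, and the sketch assumes you are permitted to collect cohort data for auditing.

```python
def selection_rates(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """outcomes maps cohort -> (number advanced, number who applied)."""
    return {
        cohort: advanced / applied
        for cohort, (advanced, applied) in outcomes.items()
        if applied > 0
    }


def flag_adverse_impact(
    outcomes: dict[str, tuple[int, int]], ratio_threshold: float = 0.8
) -> dict[str, float]:
    """Return cohorts whose selection rate falls below ratio_threshold x the highest rate.

    Flagged cohorts are a prompt for human review, not an automatic verdict.
    """
    rates = selection_rates(outcomes)
    if not rates:
        return {}
    best = max(rates.values())
    if best == 0:
        return {}  # nobody advanced; ratios are undefined
    return {
        cohort: rate / best
        for cohort, rate in rates.items()
        if rate / best < ratio_threshold
    }


print(flag_adverse_impact({"cohort_a": (50, 100), "cohort_b": (30, 100)}))
# {'cohort_b': 0.6}
```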


The Shift to Transparent, Fair Hiring Algorithms

The most significant advancement in this area is the deliberate design of fair hiring algorithms. AI trained on historically biased data (e.g., resumes from a male-dominated engineering department from 1990 to 2020) will replicate, and can even amplify, that bias. The shift therefore involves moving away from predictive models that simply reproduce past outcomes (who succeeded, or was hired, before) toward models that evaluate candidates against explicitly defined, job-relevant criteria for future success.

Where Bias Still Creeps In (and How AI Addresses It)

It’s important to be honest: AI does not eliminate bias by default, and poorly designed systems can reinforce it. Bias can enter through training data, flawed job requirements, or unexamined success criteria; if job criteria are poorly defined or historically biased, automation will reproduce those flawed assumptions at scale. Bias reduction depends on clearly defined role requirements, balanced training data, and continuous monitoring.

The difference is visibility. AI systems make patterns measurable. When bias exists, it can be audited, tested, and corrected. Manual screening offers no such transparency.

This is why modern conversations focus on bias mitigation in talent screening, not blind automation. Responsible AI exposes decision logic instead of hiding it.

AI reduces bias when it is used as a structured decision-support system, not as an unquestioned decision-maker.

AI and Transferable Skill Recognition

One of the most overlooked sources of bias is rigid role matching. Candidates who don’t look traditional are often filtered out, even when they possess highly relevant transferable skills.

AI excels at identifying these hidden connections. It recognizes when experience in one domain translates meaningfully to another, a capability that can substantially expand access to opportunity.

When transferable skills are recognized early, diversity tends to improve as a natural result. Bias fades not because it’s suppressed, but because relevance becomes clearer.

How AICRUIT Approaches Bias Reduction Differently

AICRUIT was built around one core principle: fairness requires explainability. The platform doesn’t just rank candidates; it shows why they rank where they do.

Instead of opaque scores, AICRUIT provides insight layers that explain skill alignment, strengths, and gaps. This allows hiring teams to validate decisions rather than blindly trust them. It also enables continuous refinement of evaluation criteria.

AICRUIT’s design aligns with the philosophy behind AI Candidate Screening, where discovery focuses on potential, not just pedigree.

How HR Teams Can Use AI to Reduce Bias Early

AI-based screening delivers its full value only when it is deployed according to a deliberate playbook.

Step 1: Audit and Balance Your Training Data

This is the single most critical step in how AI reduces bias in early-stage hiring. Because AI learns from data, if your historical hiring data is skewed (e.g., 90% male engineers), the AI can learn to prefer male candidates. The solution is to use curated, balanced, and diverse training datasets that represent the workforce you want to build, not the one you historically had. This proactive data hygiene helps prevent the perpetuation of past inequities.
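The audit half of this step can start with something as simple as measuring how each group is represented before any training happens. The sketch below reports group shares and computes inverse-frequency sample weights, which is one common rebalancing technique among several (collecting more data and resampling are others). The function names are hypothetical, and the sketch assumes group labels are available for auditing where the law permits.

```python
from collections import Counter


def group_shares(groups: list[str]) -> dict[str, float]:
    """Share of training examples belonging to each group."""
    counts = Counter(groups)
    return {group: count / len(groups) for group, count in counts.items()}


def balancing_weights(groups: list[str]) -> list[float]:
    """Inverse-frequency weights: every group contributes the same total weight.

    Weights average to 1.0, so the overall scale of the training signal is unchanged.
    """
    counts = Counter(groups)
    n, k = len(groups), len(counts)
    return [n / (k * counts[group]) for group in groups]


labels = ["male"] * 9 + ["female"]  # the 90/10 skew from the example above
print(group_shares(labels))  # {'male': 0.9, 'female': 0.1}
weights = balancing_weights(labels)
print(round(sum(weights[:9]), 6), round(weights[9], 6))  # 5.0 5.0
```

Reweighting only corrects representation; it does not fix labels that encode past biased decisions, which still need the review described in the later steps.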

Step 2: Define and Prioritize Objective Skills

Before the AI even touches a resume, you must define the job in terms of verifiable skills and competencies, not credentials. What matters more: a Master's degree, or proficiency in the top three software tools required for the job? By focusing on a competency-based evaluation, you set the rules for using AI to ensure fair candidate shortlisting. This clarity forces both the machine and the human to focus on capability.

Step 3: Implement Transparent, Redacted Screening

Deploy an AI system, such as AICRUIT, that redacts all protected characteristics during the initial review phase. This is the operational core of bias-resistant talent screening. The system should present the recruiter with a ranked, redacted shortlist accompanied by an objective alignment score and a clear rationale for that score. The human element is reintroduced only at the shortlist stage, minimizing the influence of unconscious bias on the mass screening process.
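The sketch below illustrates the shape of a redacted shortlist entry: protected fields removed, plus a score and a rationale listing which rubric skills were matched and which are missing. The field names, `PROTECTED_FIELDS`, and `redacted_entry` are hypothetical and are not AICRUIT's API. The sketch assumes structured profiles; redacting free-text resumes also requires detecting protected details inside the text itself.

```python
# Hypothetical protected fields; the real list depends on jurisdiction and policy.
PROTECTED_FIELDS = frozenset({"name", "gender", "date_of_birth", "nationality", "photo_url"})


def redacted_entry(profile: dict[str, object], rubric: dict[str, float]) -> dict[str, object]:
    """Build a shortlist entry with protected fields removed and a score rationale attached."""
    skills = set(profile.get("skills", []))
    matched = sorted(skill for skill in rubric if skill in skills)
    missing = sorted(skill for skill in rubric if skill not in skills)
    total = sum(rubric.values())
    score = sum(rubric[skill] for skill in matched) / total if total else 0.0
    visible = {k: v for k, v in profile.items() if k not in PROTECTED_FIELDS}
    return {
        "profile": visible,
        "score": round(score, 3),
        "rationale": {"matched": matched, "missing": missing},
    }


rubric = {"python": 3.0, "sql": 2.0, "excel": 1.0}
candidate = {"candidate_id": "c-207", "name": "A. Example", "gender": "F", "skills": ["sql", "python"]}
print(redacted_entry(candidate, rubric))
```

Keeping the matched and missing lists next to the score is what lets a recruiter check the rationale instead of trusting a bare number.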

Step 4: Continuous Monitoring and Human Oversight

AI systems are not "set it and forget it." To keep AI in recruitment behaving ethically, you must continuously monitor the system's output. Are certain demographic groups consistently ranked lower, even after anonymization? That could indicate subtle proxy bias, where the AI infers a protected trait from an innocuous-seeming detail such as a specific regional school. Human oversight and continuous audit cycles are essential to catch and correct these emerging biases, ensuring the technology remains a force for fairness.
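One way to test a single feature for proxy bias is to ask how much knowing that feature improves a guess of group membership. The measure below is the asymmetric form of Goodman and Kruskal's lambda: 0.0 means the feature adds nothing over guessing the majority group, and 1.0 means it reveals the group completely. `proxy_strength`, the example feature, and any threshold you would act on are hypothetical. The sketch assumes group labels are available for auditing, and in-sample values are inflated for features with many distinct values.

```python
from collections import Counter, defaultdict


def proxy_strength(feature_values: list[str], groups: list[str]) -> float:
    """Goodman-Kruskal lambda for predicting group membership from one feature.

    Compares majority-guess accuracy with and without knowing the feature value.
    """
    if len(feature_values) != len(groups) or not groups:
        raise ValueError("need equal-length, non-empty inputs")
    n = len(groups)
    baseline_correct = Counter(groups).most_common(1)[0][1]
    by_value: defaultdict[str, Counter[str]] = defaultdict(Counter)
    for value, group in zip(feature_values, groups):
        by_value[value][group] += 1
    # Guess the majority group within each feature value.
    informed_correct = sum(counts.most_common(1)[0][1] for counts in by_value.values())
    if baseline_correct == n:
        return 0.0  # only one group present; nothing to predict
    return (informed_correct - baseline_correct) / (n - baseline_correct)


schools = ["north_campus", "north_campus", "south_campus", "south_campus", "south_campus"]
cohorts = ["group_a", "group_a", "group_b", "group_b", "group_a"]
print(proxy_strength(schools, cohorts))  # 0.5
```

A high value does not prove the model uses the feature, but it marks the feature as worth examining in the audit cycle.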

Step 5: Leverage AI for Discovery of Transferable Skills

One of the most exciting aspects of AI-based methods for reducing resume screening bias is the ability to detect transferable skills. The AI can identify that a candidate who managed a complex logistics system in the military possesses high-level "Operations Management" and "Crisis Management" skills, even if they lack a civilian MBA. This capability actively promotes diversity by validating the skills of candidates from non-traditional or undervalued career paths.

Conclusion

The fear that AI will automate bias is valid only if we choose to deploy it thoughtlessly. In 2025, using AI to reduce bias in hiring is our most viable path toward building truly meritocratic organizations. Humans are prone to cognitive shortcuts; machines, when designed ethically and monitored transparently, apply the same criteria to every candidate.

Fairness Is a System, Not a Statement

Hiring bias isn’t solved with good intentions. It’s solved with systems that consistently reward relevance over familiarity. AI doesn’t eliminate human responsibility, but it creates a fairer starting line.

When implemented thoughtfully, AI reduces bias where it matters most: at the moment decisions are made quickly, quietly, and at scale. Early-stage hiring no longer needs to rely on intuition alone.

By implementing sophisticated platforms like AICRUIT, you are making a powerful statement that your company values diversity over comfort and fairness over fatigue. AICRUIT provides clarity, consistency, and explainable evaluation. It doesn’t promise perfect hiring. It delivers fairer hiring, and that is how real change begins.

Book a 30-minute demo and see how AI-powered recruiting can help you find the right talent faster, without the guesswork.