

AI Recruiting ROI: How to Measure It with the Right Metrics

Many hiring leaders feel confident about the return on their recruiting investments. That confidence often disappears the moment a CFO asks a simple question: “Where did this AI actually make us money?” In leadership meetings and budget discussions, AI recruiting ROI is often asserted with certainty but rarely backed by data that holds up when finance teams ask where the value actually shows up.

For years, Talent Acquisition teams have struggled to justify technology spend with clear evidence. The business case was usually built on benefits like better candidate experience, smoother workflows, or reduced administrative effort. These improvements matter to recruiters, but they are hard to translate into financial impact. As a result, recruiting investments are often seen as necessary expenses rather than measurable contributors to business performance.

The core problem is not missing data. Modern hiring systems generate more data than ever. The real issue is how ROI is measured. Too often, recruiting ROI is judged by feature usage, automation levels, or surface-level efficiency gains. When leadership, finance, or procurement ask harder questions, these metrics quickly fall apart.

Another challenge is timing. Recruiting ROI is usually evaluated too late in the process. By the time hiring results are visible, such as accepted offers or new hires, the most important decisions have already been made. At that point, it becomes difficult to attribute outcomes to specific actions or improvements.

Early-stage screening is not just important; it is decisive. It determines who enters the hiring funnel, how quickly candidates move, and how much downstream effort is wasted or saved. Because these decisions happen before interviews begin, their impact compounds quietly.

Errors made here cannot be fully corrected later, while improvements made here multiply across time, cost, and quality outcomes. This is why the ROI of AI screening is clearest when leaders measure it at the screening stage itself.

What ROI Means in Recruiting (Not Software ROI)

Recruiting ROI should not be confused with the return on a general SaaS investment. Most software ROI is calculated by simple subtraction: does the tool save more money than the license costs? Recruiting ROI metrics, by contrast, focus on how early hiring decisions affect cost, time, quality, and risk, rather than on system usage or automation levels.

In recruiting, the return is defined by how reliably early evaluations produce candidates who progress efficiently and successfully. That return shows up as improved efficiency, stronger candidate alignment, and reduced exposure to inconsistent decision-making. For example, a $20,000 AI tool that saves $25,000 in recruiter hours technically has a positive ROI. But if that same tool helps you hire a top 1% engineer who generates $1M in revenue, the return is an order of magnitude larger than the labor savings alone.

AI screening ROI is a composite metric. It must be framed as the combined effect of:

- Efficiency: how quickly and consistently candidates move through the early stages

- Quality: how accurately qualified candidates are identified

- Risk reduction: how reliably decisions can be defended and repeated

This framing moves ROI out of subjective territory and into measurable performance.

How to Calculate AI Recruiting ROI (A Practical Model)

Recruiting ROI does not require complex financial modeling. At its core, resume screening ROI measures whether improvements in early candidate evaluation reduce cost, time, and risk more than the AI investment itself.

A simplified formula looks like this:

Net recruiting return = (Screening cost reduction + Time-to-hire gains + Risk reduction value) − AI investment

ROI (%) = Net recruiting return ÷ AI investment × 100

For example:

- Reduced screening hours lower recruiter labor costs

- Faster shortlists reduce vacancy-related opportunity costs

- Fewer poor shortlists reduce interview waste and mis-hire risk

This model allows ROI to be measured consistently across roles, quarters, and hiring volumes.
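A minimal Python sketch of the simplified model follows. The class and function names are hypothetical, and each input is assumed to be an annual dollar estimate your team has already produced; the code only does the arithmetic. Subtracting the AI investment from total gains yields a net dollar return, so the sketch also divides by the investment to produce the conventional ROI percentage.

```python
from dataclasses import dataclass


@dataclass
class RoiInputs:
    """Annual dollar estimates for each term of the simplified model (hypothetical names)."""
    screening_cost_reduction: float
    time_to_hire_gains: float
    risk_reduction_value: float
    ai_investment: float


def net_recruiting_return(inputs: RoiInputs) -> float:
    """Total gains minus the AI investment, as in the simplified formula."""
    gains = (inputs.screening_cost_reduction
             + inputs.time_to_hire_gains
             + inputs.risk_reduction_value)
    return gains - inputs.ai_investment


def roi_percent(inputs: RoiInputs) -> float:
    """Conventional ROI ratio: net return divided by the investment, as a percentage."""
    if inputs.ai_investment <= 0:
        raise ValueError("ai_investment must be positive")
    return 100.0 * net_recruiting_return(inputs) / inputs.ai_investment


# The $20,000 tool that saves $25,000 in recruiter hours, other terms left at zero.
example = RoiInputs(screening_cost_reduction=25_000, time_to_hire_gains=0,
                    risk_reduction_value=0, ai_investment=20_000)
print(net_recruiting_return(example))  # 5000
print(roi_percent(example))            # 25.0
```

Keeping the three gain terms separate makes it easy to report which one drives the result, and to leave the harder-to-estimate risk term at zero until you have data to support it.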

To make this tangible, consider a high-volume hiring team reviewing 3,000 resumes per year. Before AI adoption, recruiters spent an average of 20 minutes reviewing each resume. That equates to roughly 1,000 hours of screening time annually. At a conservative, fully loaded recruiter cost of $60 per hour, screening alone costs approximately $60,000 per year.

If AI-assisted screening reduces review time by 50%, the organization recovers 500 hours of recruiter capacity. That represents $30,000 in direct labor savings, before subtracting the cost of the AI tool and before accounting for faster shortlists, reduced interview waste, or shorter vacancy durations.
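The arithmetic in this example is easy to rerun with your own volumes and rates. Here is a minimal sketch using the same inputs as the example (3,000 resumes, 20 minutes each, $60 per hour, a 50% reduction); the function names are illustrative. The savings figure is gross labor savings and does not subtract the AI investment.

```python
def screening_labor(resumes_per_year: int, minutes_per_resume: float,
                    hourly_cost: float) -> tuple[float, float]:
    """Return (hours, dollars) spent on manual resume review per year."""
    hours = resumes_per_year * minutes_per_resume / 60
    return hours, hours * hourly_cost


def screening_savings(resumes_per_year: int, minutes_per_resume: float,
                      hourly_cost: float, time_reduction: float) -> tuple[float, float]:
    """Return (hours, dollars) recovered if review time drops by `time_reduction` (0 to 1)."""
    if not 0 <= time_reduction <= 1:
        raise ValueError("time_reduction must be between 0 and 1")
    hours, dollars = screening_labor(resumes_per_year, minutes_per_resume, hourly_cost)
    return hours * time_reduction, dollars * time_reduction


print(screening_labor(3_000, 20, 60))         # (1000.0, 60000.0)
print(screening_savings(3_000, 20, 60, 0.5))  # (500.0, 30000.0)
```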

When these early-stage gains are applied across multiple roles and hiring cycles, ROI compounds quickly. The financial impact becomes measurable and defensible, which is exactly what leadership expects when evaluating AI recruiting investments.

The Measurement Framework That Makes ROI Defensible

A defensible recruiting ROI framework rests on three outcome-driven pillars: cost efficiency, time efficiency, and talent quality combined with fairness. Every metric you track should roll up to one of these categories, forming a clear set of AI recruiting metrics tied directly to cost, speed, and hiring outcomes.

Together, these pillars create a balanced, outcome-driven view of recruiting ROI, focused on what should be measured, not how tools operate.

Cost per Hire Starts at Screening, Not at Offer

Cost per hire is often discussed as a single number, but it is better understood as a collection of controllable inputs: recruiter time, screening effort, rework caused by poor shortlists, and delays created by inefficient early decisions. One of the clearest financial signals comes from comparing cost per hire against cost-per-hire benchmarks before and after changes to early-stage screening.

Among these factors, screening labor is the largest and most adjustable cost driver. Time spent manually reviewing resumes, revisiting earlier decisions, or compensating for weak shortlists accumulates rapidly across open roles, so when screening is inefficient, costs grow quietly.

Improved screening efficiency affects cost per hire by:

- Reducing the time spent per candidate evaluated

- Minimizing re-screening caused by inconsistent decisions

- Shortening vacancy duration, which lowers opportunity cost

These changes reduce recruiting costs not through headcount cuts, but through lower friction, fewer errors, and faster decisions. Recruiters re-review fewer resumes, fewer candidates are advanced by mistake, and fewer vacancies stay open longer than necessary. The resulting drop in cost per hire can be observed across roles and time periods.
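To compare cost per hire before and after a screening change, it helps to separate the screening-related inputs listed above. The sketch below is illustrative: the field names and numbers are hypothetical, and the daily vacancy cost is an assumed opportunity cost rather than an accounting figure. Accounting-style cost-per-hire definitions typically do not include vacancy cost, so drop that term if you need to match one.

```python
from dataclasses import dataclass


@dataclass
class ScreeningCostInputs:
    """Screening-related inputs for one period (all names and values are hypothetical)."""
    hires: int
    screening_hours: float      # first-pass resume review
    rescreen_hours: float       # rework caused by inconsistent decisions or weak shortlists
    hourly_cost: float          # fully loaded recruiter cost per hour
    vacancy_days: float         # total days the filled roles stayed open
    daily_vacancy_cost: float   # assumed opportunity cost per open day


def screening_cost_per_hire(c: ScreeningCostInputs) -> float:
    """Screening labor plus assumed vacancy cost, divided by hires."""
    if c.hires <= 0:
        raise ValueError("hires must be positive")
    labor = (c.screening_hours + c.rescreen_hours) * c.hourly_cost
    vacancy = c.vacancy_days * c.daily_vacancy_cost
    return (labor + vacancy) / c.hires


before = ScreeningCostInputs(hires=10, screening_hours=200, rescreen_hours=40,
                             hourly_cost=60, vacancy_days=450, daily_vacancy_cost=100)
after = ScreeningCostInputs(hires=10, screening_hours=100, rescreen_hours=10,
                            hourly_cost=60, vacancy_days=380, daily_vacancy_cost=100)
print(screening_cost_per_hire(before))  # 5940.0
print(screening_cost_per_hire(after))   # 4460.0
```

Holding hires and rates constant across the two periods isolates the effect of less screening time, less rework, and shorter vacancies.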

Time to Hire Is Shaped Before Interviews Begin

Time to hire is often treated as a single metric, but it is influenced by multiple stages. The most critical distinction is between time to shortlist and total time to hire. If qualified candidates are identified quickly, the rest of the process accelerates naturally. If screening stalls, no amount of interview optimization can recover lost time.

Early screening decisions determine how soon candidates are engaged and how likely they are to stay engaged. Delays at this stage cascade into interview scheduling, candidate drop-off, and offer timelines, and improvements later in the funnel rarely compensate for early bottlenecks.

ROI in AI recruitment shows up in time metrics when:

- Shortlists are produced faster and with less variability

- Qualified candidates are engaged earlier

- Hiring momentum is sustained across multiple roles

The impact compounds as hiring volume increases. Small reductions in early-stage time create significant gains when applied across dozens or hundreds of requisitions.
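Separating time to shortlist from total time to hire only needs three timestamps per requisition. Here is a minimal sketch, assuming your system records when a requisition opened, when the shortlist was delivered, and when an offer was accepted; the dates are invented. It reports both the median and the spread, since both speed and variability matter here.

```python
from datetime import date
from statistics import median, pstdev

# Hypothetical requisitions: (opened, shortlist delivered, offer accepted).
requisitions = [
    (date(2024, 1, 8), date(2024, 1, 15), date(2024, 2, 19)),
    (date(2024, 1, 10), date(2024, 1, 22), date(2024, 3, 1)),
    (date(2024, 2, 1), date(2024, 2, 6), date(2024, 3, 7)),
]


def stage_days(reqs):
    """Split each requisition into days-to-shortlist and total days-to-hire."""
    to_shortlist = [(shortlisted - opened).days for opened, shortlisted, _ in reqs]
    to_hire = [(accepted - opened).days for opened, _, accepted in reqs]
    return to_shortlist, to_hire


def summarize(days):
    """Median captures typical speed; population standard deviation captures variability."""
    return {"median": median(days), "stdev": pstdev(days)}


to_shortlist, to_hire = stage_days(requisitions)
print(to_shortlist)                       # [7, 12, 5]
print(to_hire)                            # [42, 51, 35]
print(summarize(to_shortlist)["median"])  # 7
```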

Explore our guide on time-to-hire improvement metrics for more insights.

Measuring DEI Impact Without Guesswork

Diversity, equity, and inclusion (DEI) outcomes are often discussed qualitatively, but they cannot be evaluated meaningfully through sentiment or intent alone. To count DEI impact as part of recruiting ROI, teams have to measure it quantitatively.

The earliest hiring decisions shape DEI outcomes more than any later stage, because they determine who is considered at all. When screening criteria are applied inconsistently, subjective signals influence who advances. Structured screening introduces consistency into these early decisions: when evaluation criteria are applied uniformly, the influence of subjective proxies is reduced.

Metrics that tend to change when screening becomes more consistent include:

- Shortlist diversity, measured by representation at the first decision point

- Progression rate parity, comparing advancement rates across candidate groups
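Progression rate parity can be computed from two counts per group. The sketch below assumes each screened candidate carries an anonymized group label and a flag for whether they advanced; the labels and counts are hypothetical. The 0.8 threshold borrows the "four-fifths rule" from the U.S. Uniform Guidelines on Employee Selection Procedures, a widely used screening heuristic for adverse impact rather than a legal conclusion on its own.

```python
from collections import Counter


def progression_rates(candidates):
    """candidates: iterable of (group_label, advanced_past_screening) pairs."""
    screened, advanced = Counter(), Counter()
    for group, did_advance in candidates:
        screened[group] += 1
        if did_advance:
            advanced[group] += 1
    return {group: advanced[group] / screened[group] for group in screened}


def parity_ratios(rates):
    """Each group's progression rate divided by the highest group's rate."""
    top = max(rates.values())
    if top == 0:
        raise ValueError("no group advanced any candidates")
    return {group: rate / top for group, rate in rates.items()}


# Hypothetical screening outcomes for two anonymized groups.
data = ([("A", True)] * 30 + [("A", False)] * 70
        + [("B", True)] * 18 + [("B", False)] * 62)
rates = progression_rates(data)
ratios = parity_ratios(rates)
flagged = [g for g, r in ratios.items() if r < 0.8]  # four-fifths heuristic
print(flagged)  # ['B']
```

Tracking these ratios before and after a screening change shows whether structured criteria actually narrowed the gap.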

From an ROI perspective, DEI outcomes are best understood as risk indicators. Inconsistent screening decisions increase exposure to compliance challenges, audit difficulty, and reputational risk. When early-stage evaluation becomes structured and repeatable, organizations reduce not only bias, but also the operational and legal risks associated with subjective hiring decisions.

Quality Signals That Strengthen ROI Analysis

While cost and time are foundational, several quality metrics strengthen ROI analysis when used correctly.

1. Shortlist Relevance (The "False Positive" Rate)

Shortlist relevance measures how closely shortlisted candidates align with role requirements, reducing downstream interview waste. It addresses the question: How many candidates sent to the hiring manager are rejected for basic missing skills?

- Metric: % of candidates rejected at "Hiring Manager Review" stage.

- Goal: Decrease this percentage. If AI is working, the hiring manager should rarely see an unqualified candidate.
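Tracking this metric mostly comes down to recording why the hiring manager rejected each shortlisted candidate. Here is a minimal sketch, assuming decisions are logged with one of three hypothetical codes. Separating "missing basic skills" from other rejection reasons isolates the false positives this metric is meant to catch.

```python
from collections import Counter

# Hypothetical decision codes recorded at the "Hiring Manager Review" stage.
ADVANCE, REJECT_SKILLS, REJECT_OTHER = "advance", "reject_missing_skills", "reject_other"


def shortlist_rejection_rates(decisions):
    """Return (overall rejection rate, rejection rate for missing basic skills)."""
    if not decisions:
        raise ValueError("no hiring-manager decisions recorded")
    counts = Counter(decisions)
    total = len(decisions)
    rejected = counts[REJECT_SKILLS] + counts[REJECT_OTHER]
    return rejected / total, counts[REJECT_SKILLS] / total


decisions = [ADVANCE] * 28 + [REJECT_SKILLS] * 8 + [REJECT_OTHER] * 4
overall, missing_skills = shortlist_rejection_rates(decisions)
print(overall, missing_skills)  # 0.3 0.2
```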

2. Interview-to-Offer Ratio

Interview-to-offer ratios indicate whether early screening decisions are producing candidates who can realistically convert.

This is the ultimate efficiency metric. It measures the density of talent in your pipeline.

- Formula: Total Interviews Conducted / Offers Extended.

- Pre-AI Benchmark: Industry average is often 5:1 or 6:1.

- AI Goal: Drive this down to 3:1.

- ROI Logic: Conducting fewer interviews per offer saves substantial amounts of high-cost engineering and leadership time.
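To translate the ratio into saved interviewer time, you also need an estimate of interviewer hours per interview. In the sketch below, that figure (1.5 hours) is an assumption, and the counts are hypothetical, chosen to mirror a move from 6:1 to 3:1.

```python
def interview_to_offer_ratio(interviews: int, offers: int) -> float:
    """Total interviews conducted divided by offers extended."""
    if offers <= 0:
        raise ValueError("offers must be positive")
    return interviews / offers


def interviewer_hours_saved(offers: int, ratio_before: float, ratio_after: float,
                            hours_per_interview: float) -> float:
    """Interviewer hours avoided when the ratio drops, holding the number of offers constant."""
    return (ratio_before - ratio_after) * offers * hours_per_interview


before = interview_to_offer_ratio(120, 20)  # 6.0
after = interview_to_offer_ratio(60, 20)    # 3.0
print(interviewer_hours_saved(20, before, after, hours_per_interview=1.5))  # 90.0
```

Multiplying the saved hours by the loaded hourly cost of the interviewers involved turns this into a dollar figure that fits the cost pillar.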

3. False Negatives (Hard to Measure, But Critical)

False negatives represent qualified candidates rejected early. These losses are rarely visible but have long-term cost implications.

This requires periodic auditing. Are you rejecting great candidates?

- Method: Randomly audit the "Rejected" pile. If you find qualified candidates that the AI rejected, your "Quality ROI" is suffering. A well-tuned AI should have a lower false-negative rate than a tired human recruiter.
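A random audit is only informative if the sample is truly random and the uncertainty is reported. Here is a minimal sketch, assuming you can list rejected candidate IDs and that reviewers label each sampled candidate as qualified or not; the counts are hypothetical. It uses the Wilson score interval because it behaves better than the simple normal approximation when the observed rate is small. It estimates the share of rejections that were qualified, which is related to, but not the same as, the formal false-negative rate (missed qualified candidates divided by all qualified candidates).

```python
import math
import random


def sample_for_audit(rejected_ids, k, seed=None):
    """Draw a simple random sample of rejected candidates for manual review."""
    rng = random.Random(seed)
    return rng.sample(list(rejected_ids), min(k, len(rejected_ids)))


def wilson_interval(hits: int, n: int, z: float = 1.96):
    """Wilson score interval for a proportion (z=1.96 gives roughly 95% coverage)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = hits / n
    denom = 1 + z ** 2 / n
    center = (p + z ** 2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z ** 2 / (4 * n ** 2)) / denom
    return max(0.0, center - half), min(1.0, center + half)


# Hypothetical: 1,200 rejected candidates, 50 audited, reviewers judged 3 qualified.
audit = sample_for_audit(range(1_200), k=50, seed=7)
qualified_found = 3
low, high = wilson_interval(qualified_found, len(audit))
rate = qualified_found / len(audit)
print(f"qualified among rejections: {rate:.1%} (95% CI {low:.1%} to {high:.1%})")
```

A wide interval is itself useful information: it tells you the audit sample is too small to support a confident claim either way.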

These signals should reinforce ROI conclusions, not replace them. They provide context that explains why cost and time metrics improve or deteriorate.

What Should Not Be Used to Prove Recruiting ROI

Not every metric that looks impressive actually proves return on investment. In recruiting, many commonly cited numbers describe activity, not impact. While these metrics may be useful for internal operations, they fail to explain whether hiring decisions are improving or whether business outcomes are changing. Relying on them can create confidence without evidence.

Here are three metrics that are often mistaken for ROI indicators but don’t hold up under closer scrutiny.

Resume Volume

Resume volume measures how many applications or resumes flow through the system. It reflects scale, not quality.

The common trap sounds like this: “We processed 50,000 resumes this year.” On the surface, this feels like progress. In reality, processing more resumes faster does not automatically create value.

The reason this metric fails is simple. Volume says nothing about decision quality. If an organization reviews 50,000 resumes but only makes a handful of hires, the process is inefficient regardless of how advanced the tooling is. Speed without selectivity only amplifies noise. Recruiting ROI is not about how much input you handle, but how effectively that input turns into successful hires.

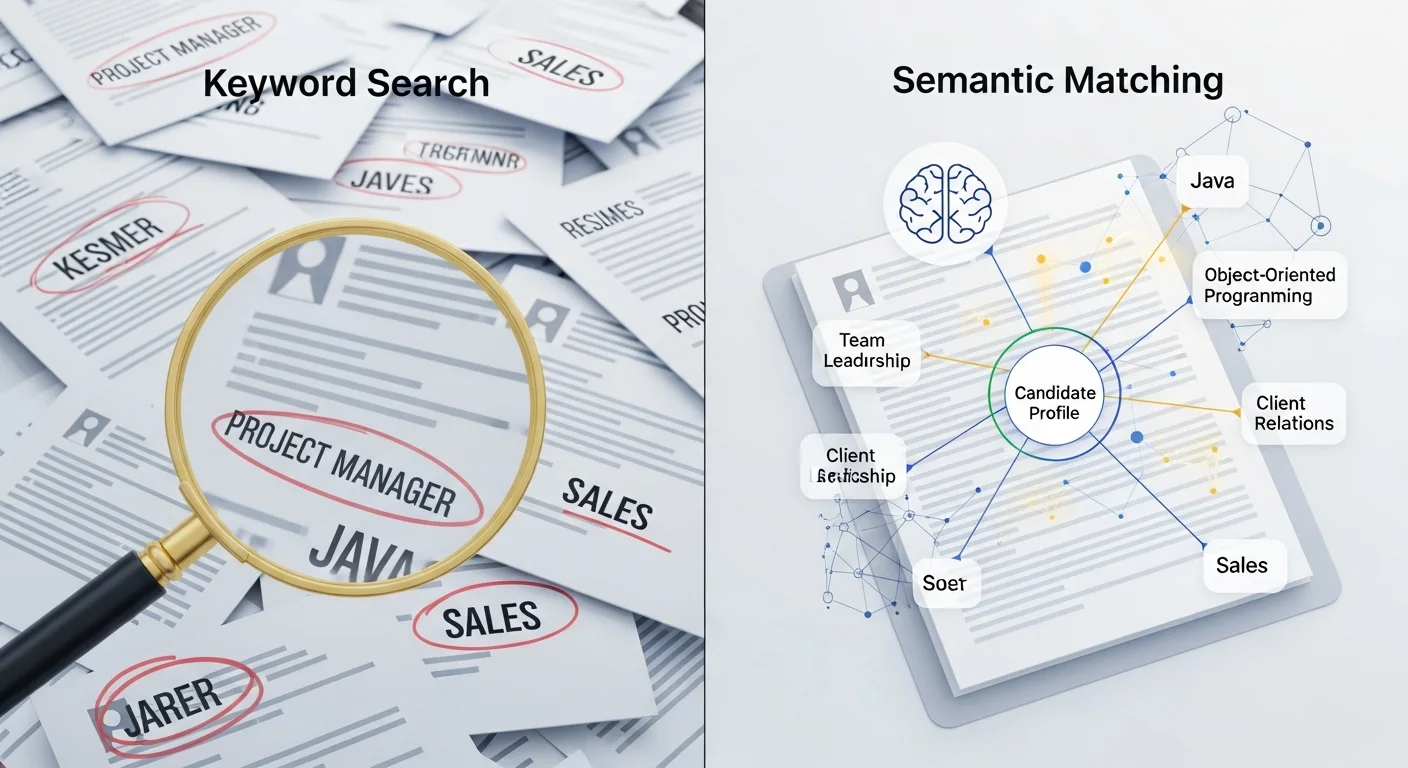

Keyword Match Rates

Keyword match rates measure how closely a resume’s wording aligns with predefined terms. They describe text similarity, not candidate capability.

The typical trap is: “This candidate is an 85% keyword match.” While the number sounds precise, it hides a major flaw.

Candidates can include keywords without having real experience, and strong candidates may describe their skills differently. A high match score does not guarantee performance, just as a lower score does not indicate a poor fit. When match rates are presented without linking them to outcomes, such as interview success or hiring decisions, they become misleading.
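One way to test whether a match score means anything is to compare it with a downstream outcome. Here is a minimal sketch, assuming you can join each candidate's match score to whether they passed the first interview. The band width and records are invented for illustration, and real samples need to be far larger before drawing conclusions. If higher bands do not show higher pass rates on your own data, the score is likely describing wording more than capability.

```python
from collections import defaultdict


def pass_rate_by_match_band(records, band_width=20):
    """Group (match_score 0-100, passed_first_interview) pairs into score bands.

    Returns {band_lower_bound: (candidates, pass_rate)}, ordered by band.
    """
    totals, passes = defaultdict(int), defaultdict(int)
    for score, passed in records:
        # Clamp a perfect score of 100 into the top band.
        band = min(int(score) // band_width * band_width, 100 - band_width)
        totals[band] += 1
        passes[band] += int(passed)
    return {band: (totals[band], passes[band] / totals[band]) for band in sorted(totals)}


# Illustrative records only, constructed to show the pattern the text warns about.
records = [(85, False), (90, True), (88, False), (62, True), (65, True), (70, False)]
print(pass_rate_by_match_band(records))
```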

Standalone “Accuracy” Claims

Accuracy is one of the most misused terms in recruiting technology. When presented without context, it has little meaning.

The trap usually sounds like: “The AI is 95% accurate.” The immediate question should be: accurate at what?

Accuracy can refer to parsing resumes, extracting fields, ranking candidates, or predicting success. Unless accuracy is tied to a clear business outcome, such as “95% of candidates recommended at the screening stage passed the first interview,” the number provides no insight into hiring quality or ROI.
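An outcome-tied figure like the 95% example is straightforward to compute once screening recommendations and first-interview results live in the same record. Here is a minimal sketch with hypothetical field names and counts. Candidates who were recommended but not yet interviewed are excluded rather than counted as failures. This measures the precision of recommendations only. It says nothing about qualified candidates who were never recommended, which is what a false-negative audit covers.

```python
def recommendation_pass_rate(candidates):
    """Share of screening-stage recommendations that passed the first interview.

    candidates: iterable of dicts with hypothetical keys "recommended" (bool) and
    "passed_first_interview" (True, False, or None if not yet interviewed).
    """
    outcomes = [c["passed_first_interview"] for c in candidates
                if c["recommended"] and c["passed_first_interview"] is not None]
    if not outcomes:
        raise ValueError("no interviewed recommendations to evaluate")
    return sum(outcomes) / len(outcomes)


pipeline = ([{"recommended": True, "passed_first_interview": True}] * 19
            + [{"recommended": True, "passed_first_interview": False}]
            + [{"recommended": True, "passed_first_interview": None}] * 3
            + [{"recommended": False, "passed_first_interview": None}] * 40)
print(f"{recommendation_pass_rate(pipeline):.0%}")  # 95%
```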

Resume volume, keyword match rates, and vague accuracy claims may look impressive in presentations, but they do not show whether hiring is faster, better, or less risky.

To maintain credibility with leadership, recruiting ROI must be grounded in metrics that connect directly to outcomes. Vanity metrics may simplify storytelling, but they have little correlation with real business value.

Establishing a Baseline Before AI Adoption

Effective hiring ROI measurement requires a baseline. Measuring improvement is impossible without understanding pre-adoption performance.

Before adopting AI recruiting tools, teams must document:

- Average screening time per role

- Cost per screened candidate

- Shortlist quality indicators

- Progression rates from screening to interview

- Interview-to-offer ratio

- Shortlist rejection rate
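Recording the baseline as a fixed snapshot turns the before-and-after comparison into a mechanical calculation. Here is a minimal sketch, assuming one snapshot per measurement period containing the six indicators listed above; the field names and values are hypothetical. Direction matters when reading the output. Lower is better for hours, cost, the interview-to-offer ratio, and the rejection rate. Higher is usually better for shortlist quality and screen-to-interview progression.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class ScreeningSnapshot:
    """One measurement period. Field names are hypothetical; units must match across periods."""
    screening_hours_per_role: float
    cost_per_screened_candidate: float
    shortlist_quality_score: float   # whichever shortlist-quality indicator your team defines
    screen_to_interview_rate: float
    interview_to_offer_ratio: float
    shortlist_rejection_rate: float


def percent_change(baseline: ScreeningSnapshot, current: ScreeningSnapshot) -> dict:
    """Percent change per metric; None where the baseline value is zero."""
    changes = {}
    for f in fields(baseline):
        before, after = getattr(baseline, f.name), getattr(current, f.name)
        changes[f.name] = None if before == 0 else 100.0 * (after - before) / before
    return changes


baseline = ScreeningSnapshot(40, 20.0, 0.70, 0.25, 6.0, 0.30)
post_ai = ScreeningSnapshot(20, 10.0, 0.80, 0.30, 3.0, 0.20)
for metric, change in percent_change(baseline, post_ai).items():
    print(f"{metric}: {change:+.1f}%")
```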

Without a baseline, claims of ROI become narratives rather than measurements. If you skip this step, you will never be able to prove ROI. You will only be able to say, "It feels faster," which is not enough to justify next year's renewal budget.

Baseline discipline is what separates defensible ROI analysis from anecdotal success stories.

Turning Metrics into Measurable ROI

Recruiting ROI becomes repeatable when leaders commit to measuring early-stage outcomes consistently over time. This does not require complex models or excessive reporting. It requires discipline in tracking the same indicators before and after changes are introduced.

When organizations treat screening as the foundation of hiring performance, ROI becomes easier to defend. Metrics replace assumptions. Patterns replace anecdotes. Decisions become easier to explain.

Operationalizing recruiting ROI requires systems designed around measurement discipline. Platforms like AICRUIT are built around these principles rather than surface-level automation. Instead of optimizing for resume volume or keyword scores, the system focuses on early-stage decision quality: structuring screening criteria, enforcing consistency, and surfacing the metrics that actually connect to recruiting ROI. Because screening outcomes become measurable and repeatable across roles and hiring cycles, it is easier to track where ROI is created and how improvements compound over time.

For teams exploring how these principles are applied in practice, the guides on recruiter productivity metrics and false negatives in hiring provide deeper, more focused analysis.

Conclusion

Recruiting ROI should be evaluated in the context of an organization's scale and operating complexity: not as a universal framework, but as a system that matures as structure is added. At its core, recruiting ROI is not a belief, a feeling, or a marketing promise. It is the measurable outcome of early hiring decisions, tracked consistently and validated over time.

AI does not inherently produce positive ROI. Its impact is entirely dependent on the structure surrounding it. When job definitions are unclear, evaluation criteria are inconsistent, or baseline metrics are missing, AI simply accelerates flawed decisions rather than correcting them.

AI amplifies structure; it does not replace it. Without disciplined screening logic, transparent evaluation rules, and continuous measurement, ROI claims remain unreliable regardless of technological sophistication. This is why sustainable recruiting ROI is not driven by technology alone. It is driven by clarity, measurement discipline, and ongoing outcome validation. When those foundations exist, AI becomes a force multiplier. When they do not, ROI erodes quickly.

Book a 30-minute demo and see how AI-powered recruiting can help you find the right talent faster, without the guesswork.